The Fall of Google Search: Part 3 - Results Quality

Alexandra Lustig

May 24, 2024

Welcome back to the third and final installment of my three-part series on The Fall of Google Search.

In Part 1, we covered how Google’s relationship with advertisers is degrading the experience for users, causing them to turn to ad-free or ad-blocking browsers and search engines. In Part 2, we discussed how users are becoming increasingly aware of, and averse to, their data being farmed and sold. Hopefully, you’re now (or still) on board with the idea that privacy is an inherent and fundamental human right, and that giant companies like Google have a responsibility to safeguard users’ private data and information.

Today we’re going to cover the declining quality of Google’s search results overall. If you already use ad-blockers or aren’t convinced you should care about your data privacy, I think the decline in results quality will convert you to my team. After all, if you’re not getting what you want from a product, what’s the point of using it? First, it’s important to define what I mean by “quality” results. Generally, quality means meeting a high standard of excellence. For search results, it usually refers to how well a response fulfills the requirements of the query. Keep in mind that in this article, I’m talking about the user’s experience of interacting with Google Search and its text-based responses, not the content creator’s experience of producing them.

What’s Google’s definition?

Google is notorious for overcomplicating the definition of “quality search results” and evading a straightforward answer, attributing everything to its black-box algorithm, which it changes about 500-600 times a year. For content creators, there are Google’s Search Quality Evaluator Guidelines, Google’s Webmaster Guidelines, and many more resources to “help” creators understand how to get their content ranked. And of course, for advertisers, there are tons of resources to help them understand “Quality Score,” performance, and more.

But what about for users? You’d be hard-pressed to find an answer that actually takes the end user’s perspective into account. And shouldn’t that be priority number one?

In my opinion, “quality of search results” for the user can be very simply defined as being accurate, objectively correct, and timely.

“Accuracy” means that I’m getting an answer that is precise or a result that is relevant to my query. “Objectively correct” means that the content is true, factual, and authentic. “Timely” means it’s current and contains up-to-date information. If I’m looking for an answer to something, I need all 3.

For example, if I search for “closest Mexican restaurant,” the results should be:

Accurate: there is a Mexican restaurant down the road/close to me.

Objectively correct: the restaurant serves Mexican food.

Timely: the restaurant is still operating.

Pretty simple, right? So, let’s explore what the hell is going on with Google’s search results and why we can objectively say the quality is terrible today based on those 3 parameters.

This article is especially timely, since Google just announced its expanded AI Overviews feature at I/O to “take the legwork out of searching.” I guess even Google realized it’s been difficult for users to actually find a good result recently…

AI Overviews (AIO) is Google’s first large feature rollout with its AI engine, Gemini (previously “Bard”), in which AI-generated summaries appear at the top of the SERP (search engine results page), above human-written results. We’ve been anticipating this for a while as the next step in the AI-ification of everything; it was only a matter of time until Google fought back against the other AI assistants that have been pulling users away from Search over the last year. Oh, but don’t worry, ads aren’t going anywhere and will continue to appear throughout the page. Since AIO has launched and users can’t turn it off, we’ll be evaluating results with AI Overviews in mind.

What does this mean for how we think about search results? Let’s go back to our parameters.

Accurate:

With Google pulling from multiple web sources to answer a user’s query and serving the result at the top of the page, we have to ask what exactly those sources are and how AI is being used to complete that task. Over the past 25 years, Google has built a reputation for trustworthiness, truthfulness, and integrity when it comes to the world’s information. But in the ten days since AIO’s release, we’ve seen an actual dumpster fire of search results with glaring errors, inaccuracies, and straight-up plagiarism, with implications ranging from hilarious to downright dangerous.

For example, one user searched “how many signers of the Declaration of Independence” and AIO answered, “According to the University Libraries at Washington University in St. Louis, one-third of the 56 delegates who signed the Declaration of Independence in 1776 were enslavers. The final version of the Declaration does not mention slavery, but the original draft did include a condemnation of it.”

The answer is somewhere in there, but I wouldn’t call that an “accurate” answer when it could have just said “56 delegates signed the Declaration of Independence.”

In another example, a user asked, “southernmost point in mainland alaska” and AIO answered, “The southernmost point of mainland Alaska is the tip of Amatignak Island in the Aleutian Chain.”

That response is related to the question, but it isn’t accurate. Anyone who understands that an island can’t be part of a mainland would spot the error, but I’d bet most people would just blindly accept it as true.

My personal favorite example is the user who asked “food names end with um” and AIO answered, “Here are some fruit names that end with ‘um’: Applum, Banana, Strawberrum, Tomatum, and Coconut.”

It seemed to understand the question and restated it in the answer, but then completely went off the rails. Also, PLUM.

Objectively Correct:

Ok, so AI Overviews is clearly struggling with accuracy. But what about stuff that’s just objectively correct or incorrect? One example I saw was a user asking “did cavemen go bald” and Google answering, “A well-polished bald male head was often used by tribes of cavemen to blind predators. As a result every caveman hunting group of 8 had one bald member, and thus thousands of years later 1 in 8 men experience early on set [sic] of baldness.”

Now, obviously this is hilarious, but the result cites a Guardian article as its source, which lends it some cachet and weight, even though, if you click through, that particular answer comes from a comment by Taz Boonsberg of London.

In another example, a user asked “how many rocks should I eat” and Google answered, “According to UC Berkeley geologists, eating at least one small rock per day is recommended because rocks contain minerals and vitamins that are important for digestive health. However, some say that eating pebbles regularly is not a good idea because they can get stuck in the large intestine and make it harder to function.”

This example is also hilarious, but you may be wondering where “Gemini” is getting this from. The answer: The Onion. I’m astonished that the developers of this feature didn’t simply add a “-site:theonion.com” exclusion. It really makes you wonder what’s going on over there… Amusing results, for sure. But what about questions that have real consequences?
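
For the curious, here’s roughly what I mean. This is a hypothetical Python sketch, not Google’s actual pipeline: it assumes a summarizer that receives a list of candidate source URLs, and it drops any URL whose domain (or subdomain) is on a blocklist, which is more or less the programmatic version of tacking “-site:theonion.com” onto a query. The blocklist, the function names, and the example URLs are all made up for illustration.

```python
from urllib.parse import urlparse

# Hypothetical blocklist of satire domains (an assumption for illustration only).
SATIRE_DOMAINS = frozenset({"theonion.com"})


def is_blocked(url: str, blocked: frozenset[str] = SATIRE_DOMAINS) -> bool:
    """Return True if the URL's host is a blocked domain or one of its subdomains."""
    # urlparse(...).hostname is already lowercased; it is None for malformed URLs.
    host = urlparse(url).hostname or ""
    # Match the exact domain or a true subdomain (".theonion.com"),
    # so a lookalike such as "nottheonion.com" is not caught by accident.
    return any(host == d or host.endswith("." + d) for d in blocked)


def filter_sources(urls: list[str]) -> list[str]:
    """Keep only the candidate source URLs that are not on the blocklist."""
    return [u for u in urls if not is_blocked(u)]


# Hypothetical candidate sources for the "how many rocks should I eat" query.
candidates = [
    "https://www.theonion.com/some-satire-article",
    "https://example.edu/geology/are-rocks-edible",
]
print(filter_sources(candidates))
```

Running it prints only the example.edu URL. The subdomain check is the one detail that matters: a naive substring match would also throw out a site like nottheonion.com. And of course, a blocklist only handles sources you already know are satire; it does nothing about a shitpost buried in a forum thread.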

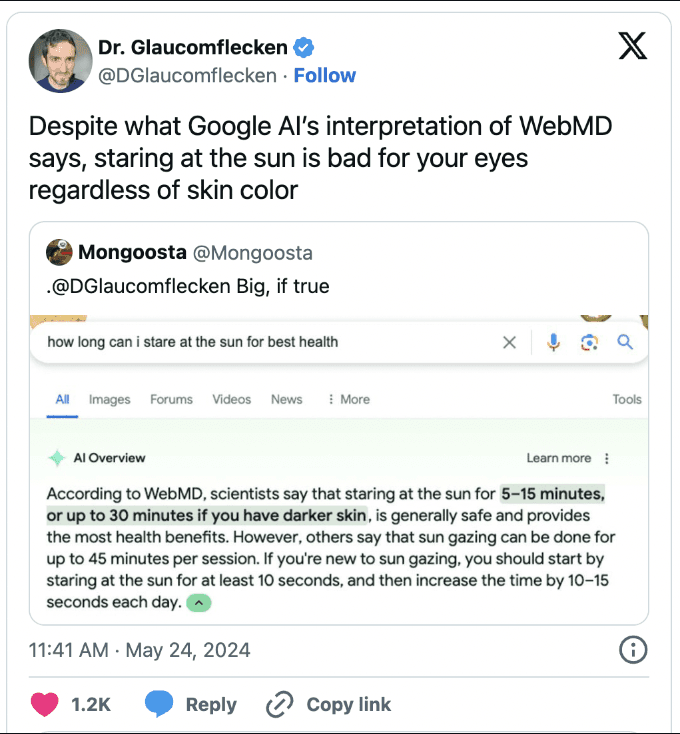

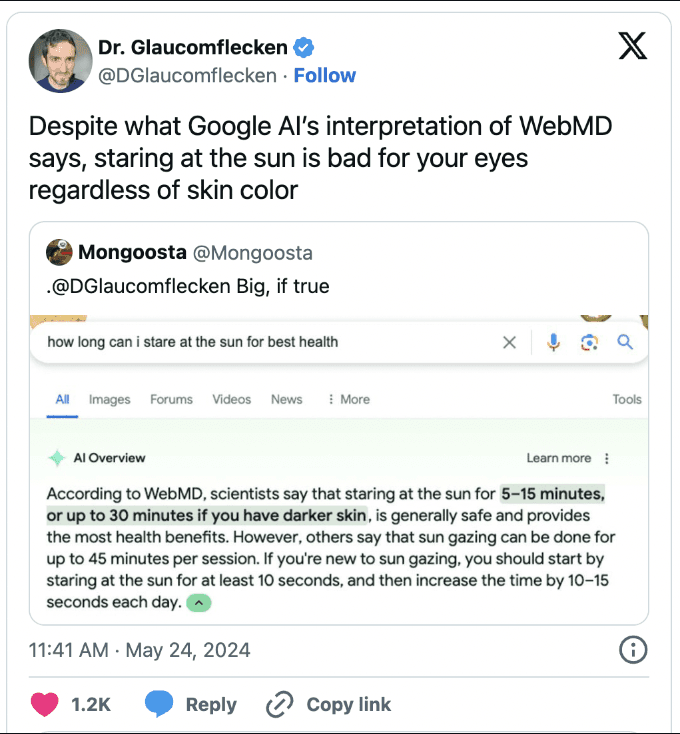

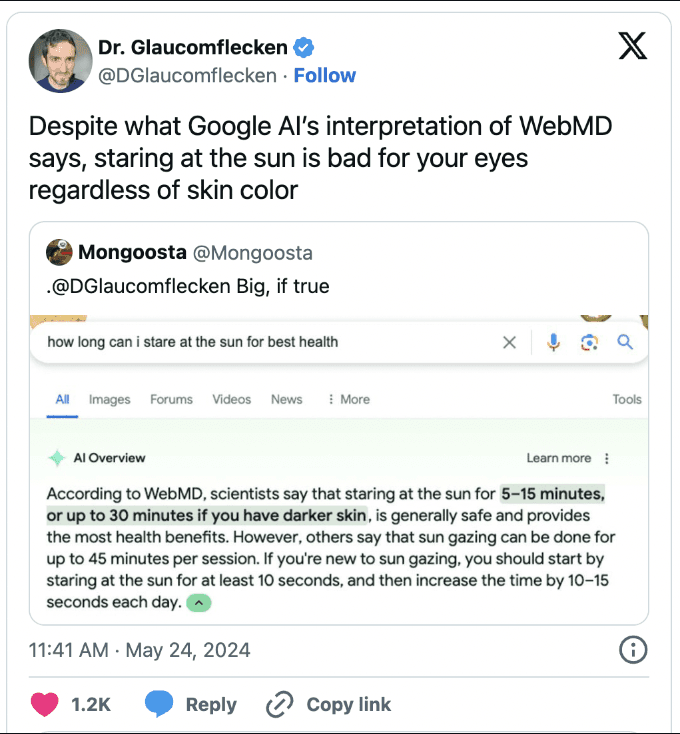

Twitter user Mongoosta posted a screenshot of a search for “how long can I stare at the sun for best health” and Google served up the answer, “According to WebMD, scientists say that staring at the sun for 5-15 minutes, or up to 30 minutes if you have darker skin, is generally safe and provides the most health benefits.”

This answer isn’t just factually incorrect; it’s dangerous. No one can safely stare at the sun for any amount of time, unless you’re Trump, I guess. It’s also unclear why the AI Overview would make a distinction based on skin color or tone, which has real implications for people of color. The American healthcare system is burdened with inequalities that disproportionately affect people of color and other marginalized communities. These disparities lead to significant gaps in health insurance coverage, limited access to healthcare services, and poorer health outcomes, with African Americans being particularly impacted. The fact that Google’s health-related results can be more harmful to people of color is both intriguing and alarming.

Many of the other AI Overview fails I’ve seen posted in the last week are answers pulled from Reddit, a bottomless source of information that has been increasingly mined by users and Google alike. It’s not unusual to see Reddit results on Google, especially since Google’s core update last summer gave huge preference to the site after noticing how many users add “reddit” to the end of their search queries. The danger, however, is that Reddit is chock-full of shitposts, or content of “aggressively, ironically, or trollishly poor quality.”

It’s kind of crazy to me that Google hasn’t figured out how to filter, sort, and clean information from sources like Reddit and The Onion. Margaret Mitchell, a former Google AI ethics researcher, put it well in a tweet: “Look, this isn’t about ‘gotchas,’ this about pointing out clearly foreseeable harms. Before–eg–a child dies from this mess. This isn’t about Google, it’s about the foreseeable effect of AI on society.”

Interestingly, one of those effects falls on the very sources Google is pulling from. With AIO generating answers at the top of the SERP (when it’s working correctly), there would ostensibly be no reason for users to scroll or click on anything. With no one clicking on links, those websites lose their traffic, and those businesses will basically be wiped out. Outside of Wikipedia, most websites create and publish content to make money, and if there’s no more money to be made, there’s no incentive to create and publish content. For most of AIO’s results so far, we’re seeing answers pulled directly from other information or news sources without even rewriting the lines. Google is effectively plagiarizing the entire internet. And you know how the internet feels about plagiarism: those publications aren’t going down without a fight, or a deal. News Corp recently announced a multi-year deal to license its archived and current content to ChatGPT parent OpenAI. Its rival, The New York Times, has sued OpenAI and Microsoft for copyright infringement. It’s only a matter of time before more lawsuits pop up over Google’s AI Overviews. And with more content barred from use, how will AIO generate answers?

Timely:

For the last decade or so, the trend in Google Search was to put much more emphasis on ranking the newest content at the top of the SERP. Bloggers, social influencers, and publications were absolutely churning out content in order to be the most “timely,” rank higher on Google, get more clicks, and make more money. Every brand and company has sought to generate more web-based revenue, and for those that don’t actually sell products or services, content has been the go-to revenue strategy. In the past decade, the internet has been all about creating content, promoting content, marketing content, and doing it all again. First it was blogs, then long-form videos on YouTube, then photos on Instagram, then short-form text on Twitter, then short-form videos, and then just *everything*. This is one of the reasons the internet has become more of a sludge pile to wade through than a place to find clear information.

The incessant posting and content generation by blogs, publications, and social media platforms led to a devaluation of authoritative, quality content, with newness prized above all else. Weirdly, though, I’ve noticed in the past six months or so that the pendulum has swung in the opposite direction: I’ve been getting increasingly older answers to my queries rather than the most up-to-date ones.

For example, I posted on Threads about searching for how to perform a certain function in Photoshop and getting a result from 13 (thirteen!) years ago. If the query had been about a settled fact, an answer from over a decade ago could still be relevant. But for an application whose software and UI change all the time, that answer was obviously unhelpful and inaccurate. That experience was merely frustrating, but when it comes to news publications, stale results can seriously affect how accurate information is disseminated. Timeliness is extremely important to the relevance of information, now more than ever. Given the path Google’s AIO is taking, I’m not convinced this issue will get better. We’re going to see a 25% decrease in the volume of content published due to the loss of monetary incentives and major corporations barring Google from scraping their content, leaving Google’s AIO with less content to crawl, fewer up-to-date articles to scrape, and an increasing amount of the AI-generated nonsense that’s already filling up the web.
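
Here’s a rough way to picture why a result’s age should matter more for some queries than for others. In this hypothetical Python sketch (my assumption, not how Google actually ranks anything), each result’s relevance score is discounted by an exponential decay with a configurable half-life: short for fast-changing topics like software UIs, effectively infinite for settled facts. The titles and scores are invented to mirror my Photoshop example.

```python
from dataclasses import dataclass


@dataclass
class Result:
    title: str
    relevance: float  # hypothetical 0-1 relevance score from some ranker
    age_years: float  # how old the page is


def freshness_weight(age_years: float, half_life_years: float) -> float:
    """Exponential decay: a result loses half its weight every half_life_years."""
    return 0.5 ** (age_years / half_life_years)


def rank(results: list[Result], half_life_years: float) -> list[Result]:
    """Sort results by relevance discounted by age, best first."""
    return sorted(
        results,
        key=lambda r: r.relevance * freshness_weight(r.age_years, half_life_years),
        reverse=True,
    )


# Hypothetical results for a Photoshop how-to query.
results = [
    Result("Old Photoshop tutorial", relevance=0.9, age_years=13),
    Result("Current Photoshop help page", relevance=0.7, age_years=0.5),
]

# Fast-changing software: short half-life, so the recent page should win.
print([r.title for r in rank(results, half_life_years=2)])
# Settled facts: a huge half-life means age barely matters.
print([r.title for r in rank(results, half_life_years=1000)])
```

With a two-year half-life, the 13-year-old tutorial keeps only about 1% of its weight, so the recent help page ranks first; with a 1,000-year half-life, age barely matters and the older, slightly more relevant page wins. Deciding which half-life fits which kind of query is the genuinely hard part.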

I mean, have you seen Facebook recently? 404 Media published a great article on a series of AI-generated “Shrimp Jesus” images that went viral with an audience of people who “mindlessly interact with them and perhaps don’t understand that they are not real.”

With fewer brands posting content, publications shutting out access, less content overall to pull from, and more AI drivel taking over, Google will eventually eat its own tail, arriving at a moment that Nilay Patel, editor-in-chief of The Verge, calls “Google Zero.” So, what does Google think about all this? Does it think the current SERP delivers “quality results”?

Patel recently got the chance to ask Google CEO Sundar Pichai about the end-user experience on Search, using a few examples from his own experience with AI Overviews. When Patel searched “best chromebooks,” he got a bunch of product listings (like, a lot of them), then some headlines containing the phrase “best chromebook,” but no AI Overview to be found. Patel asks very simply, “do you think that’s a good experience today? Like, is that a waypoint or a destination?”

Here’s Pichai’s response: first, he tries a couple of times to dissect the user experience, claiming that the product worked as intended and “respected the user’s intent.” They go back and forth a bit about some moot details as Pichai tries to deflect, but eventually (after talking about how they’re still testing the feature, how it’s hard to predict the future, etc.) his answer to the question is “that seems like a reasonable direction to me.”

Ok, so in the first instance, the feature didn’t even appear, but Pichai said it’s a “reasonable direction.”

Patel shows another example, this time with an AI Overview (ok, we’re already doing better than the first one) showing a downright plagiarized answer (oops, got too excited). The query was “jetblue mint lounge sfo,” and the AIO shows a couple of sentences as the answer, lifted word-for-word from the blog it’s pulling from. That’s clearly not an answer generated by AI at all, but one copied directly from an article. Another failure, but Pichai still claims “people are valuing this experience.”

Well, if not for its utility, definitely for its comedic value.

No one asked me, but if I were CEO, I simply would not launch a feature that could have devastating and dangerous consequences until I’d fixed those glaring issues. I think Google jumped the gun on releasing AI Overviews in an attempt to beat the competition, and for a company like Google, which has roughly 91% of the search market, that’s kind of a biggie.

I’ll be interested to see more reactions from users, and potentially more responses from Google about the feature’s failures, in the coming weeks. I’m also keeping an eye on Apple, which has yet to release its rumored AI-integrated Siri; perhaps it’s waiting until the kinks are ironed out?

Thanks for reading, everyone! What do you think about AI Overviews? Have you turned to a different search engine for your daily use? What do you think the future of Google Search looks like? Tell me in the comments :)

This article was originally posted on a Squarespace domain on 5/24/24. Comments from that domain have been lost.

Welcome back to the third and final installment of my 3-Part series on The Fall of Google Search.

In Part 1, we covered how Google’s relationship to advertisers is degrading the experience for users, causing them to turn to ad-free or ad-blocking browsers and search engines. In Part 2, we discussed how users are becoming increasingly aware of and adverse to their data being farmed and sold. Hopefully, you’re now (or still) on board with the fact that privacy is an inherent and fundamental human right and giant companies like Google have a responsibility to safeguard users’ private data and information.

Today we’re going to cover the declining quality of search results on Google overall. If you’re someone who already uses ad-blockers and/or is not convinced you should care about your data privacy, I think the decline in quality of the results will successfully convert you to my team. After all, if you’re not getting what you want from a product, what’s the point of using it? First, I think it’s important to define what I mean by “quality” results. Generally, we define it as having a standard of excellence or holding a high degree. For search results, we usually refer to how well a response fulfills the requirements of the question. Keep in mind that in this article, I’m going to be talking about the user’s perspective of interacting with Google Search with these text-based responses, not the content creators’ experience of creating it.

What’s Google’s definition?

Google is notorious for overcomplicating the definition of “quality search results” and evading a straightforward answer, simply attributing everything to its black box algorithm, which they change about 500-600 times a year.For content creators, there’s Google’s Search Quality Evaluator Guidelines, Google’s Webmaster Guidelines, and many more resources to “help” creators understand how to get their content ranked. And of course, for advertisers, there’s tons of resources to help them understand “Quality Score,” performance, and more.

But what about for users? You’d be hard-pressed to find an answer that actually takes the end user’s perspective into account. And shouldn’t that be priority number one?

In my opinion, “quality of search results” for the user can be very simply defined as being accurate, objectively correct, and timely.

“Accuracy” means that I’m getting an answer that is precise or a result that is relevant to my query. “Objectively correct” means that the content is true, factual, and authentic. “Timely” means it’s current and contains up-to-date information. If I’m looking for an answer to something, I need all 3.

For example, if I search for “closest Mexican restaurant”, the results should be

Accurate: there is a Mexican restaurant down the road/close to me.

Objectively correct: the restaurant serves Mexican food.

Timely: the restaurant is still operating.

Pretty simple, right? So, let’s explore what the hell is going on with Google’s search results and why we can objectively say the quality is terrible today based on those 3 parameters.

This article is actually pretty pertinent itself since Google just released their new feature, expanded AI Overviews, at I/O, to “take the legwork out of searching.” I guess even they realized it’s been difficult for users to actually find a good result recently…

AI Overviews is Google’s first large feature rollout with their AI engine, Gemini (previously “Bard”), wherein summaries generated by AI will appear at the top of SERP, above human-written results. We’ve been anticipating this for a while as the next step for the AI-ificiation of everything- it’s been just a matter of time until Google retaliated against the other AI assistants that have been taking users away from Search in the last year. Oh, but don’t worry, ads aren’t going anywhere and will continue to appear throughout the page. With AIO’s release, and since users can’t turn off the feature, we will be evaluating results with AI Overviews in mind.

What does this mean for how we think about search results? Let’s go back to our parameters.

Accurate:

With Google pulling from multiple web sources to answer a user’s query and serving it at the top of the page, we have to ask ourselves what exactly those sources are and how AI is being used to complete that task. Over the past 25 years, Google has built a reputation of trustworthiness, truthfulness, and integrity when it comes to the world’s information. But in the last ten days since AIO’s release, we’ve seen an actual dumpster fire of search results with glaring errors, inaccuracies, and straight-up plagiarism, the implications of which range from hilarious to downright dangerous.

For example, one user searched “how many signers of the Declaration of Independence” and AIO answered, “According to the University Libraries at Washington University in St. Louis, one-third of the 56 delegates who signed the Declaration of Independence in 1776 were enslavers. The final version of the Declaration does not mention slavery, but the original draft did include a condemnation of it.”

The answer is somewhere in there, but I would not call that an “accurate” answer when they could have just said “56 delegates signed the Declaration of Independence.”

In another example, a user asked, “southernmost point in mainland alaska” and AIO answered, “The southernmost point of mainland Alaska is the tip of Amatignak Island in the Aleutian Chain.”

That response is related to the question, but it’s not the accurate answer. Someone who understands an island cannot be part of a mainland would realize the inaccuracy of the answer, but I’d bet most people would just blindly accept it as true.

My personal favorite example is the user who asked “food names end with um” and AIO answered, “Here are some fruit names that end with “um”: Applum, Banana, Strawberrum, Tomatum, and Coconut.”

It seemed to understand the question and restated it in the answer, but then completely went off the rails. Also, PLUM.

Objectively Correct:

Ok, so AI Overviews is clearly struggling with accuracy. But what about stuff that’s just objectively correct or incorrect? One example I saw was a user asking “did cavemen go bald” and Google answering, “A well-polished bald male head was often used by tribes of cavemen to blind predators. As a result every caveman hunting group of 8 had one bald member, and thus thousands of years later 1 in 8 men experience early on set [sic] of baldness.”

Now, obviously this is hilarious, but the result is citing a Guardian article as its source, which gives the result some caché and weight to it, even though if you click through, that particular answer is from a comment by Taz Boonsberg of London.

Another example I’ve seen is this one I saw where a user asked “how many rocks should I eat” and Google answered, “According to UC Berkeley geologists, eating at least one small rock per day is recommended because rocks contain minerals and vitamins that are important for digestive health. However, some say that eating pebbles regularly is not a good idea because they can get stuck in the large intestine and make it harder to function.”

This example is also hilarious but you may be wondering, where is “Gemini” getting this from? And the answer is: The Onion. I mean, I’m astonished that the developers of this feature did not include an “-site:theonion.com” in here. It really makes you think about what’s going on over there…Amusing results, for sure. But what about questions that would have some real consequences?

Twitter user Mongoosta posted a screenshot of a search for “how long can I stare at the sun for best health” and Google served up the answer, “According to WebMD, scientists say that staring at the sun for 5-15 minutes, or up to 30 minutes if you have darker skin, is generally safe and provides the most health benefits.”

This answer is obviously factually incorrect, but it’s also dangerous. No one can safely stare at the sun for any amount of time unless you’re Trump, I guess. It’s also unclear why the AI Overview would make a distinction about skin color or tone, which also has some real implications for people of color. The American healthcare system is burdened with inequalities that disproportionately affect people of color and other marginalized communities. These disparities lead to significant gaps in health insurance coverage, limited access to healthcare services, and poorer health outcomes, with African Americans being particularly impacted. The fact that health-related results generated by Google can be more harmful to people of color is both intriguing and alarming.

Many other posts I’ve seen in the last week showcases AI Overview fails are answers being pulled from Reddit, a bottomless source of information that has been increasingly mined by users and Google alike. It’s not unusual to see results from Reddit on Google, especially since Google’s core update last summer gave huge preference to the site after noticing so many users add “reddit” to the end of their search queries. However, the danger here is that Reddit is chock-full of shitposts, or “aggressively, ironically, or trollishly poor quality.”

It’s kind of crazy to me that Google hasn’t figured out how to filter, sort, and clean information from sources like Reddit and sites like The Onion. Margaret Mitchell, former Google AI ethics researcher said it well in a tweet when she said “Look, this isn’t about ‘gotchas,’ this about pointing out clearly foreseeable harms. Before–eg–a child dies from this mess. This isn’t about Google, it’s about the foreseeable effect of AI on society.”

Interestingly, one of those effects is on the sources Google is pulling from. With AIO generating answers at the top of SERP (when it’s working correctly), there would ostensibly be no reason for users to scroll or click on anything. With no one clicking on links, there will be no more traffic to those websites, and those businesses will be basically wiped out. Outside of Wikipedia, most websites create and publish content to make money, and if there’s no more money to be made, there’s no incentive to create and publish content. For most of AIO’s results so far, we’re seeing them directly pull from other information or news sources, not even taking a step to re-write the lines. They’re effectively plagiarizing the entire internet. And you know how the internet feels about plagiarism: those publications aren’t going down without a fight– or a deal. News Corp recently announced a multi-year deal to license its archived and current content to ChatGPT parent OpenAI. Its rival, The New York Times, has filed a lawsuit against OpenAI and Microsoft for copyright infringement. It’s only a matter of time before we see more lawsuits pop up with respect to Google’s AI Overviews. And with more content barred from use, how will they generate answers?

Timely:

The trend in the last decade or so for Google Search was to put much more emphasis on ranking the latest and newest content published at the top of SERP. Bloggers, social influencers, and publications were just absolutely churning out content in order to be the most “timely,” rank higher on Google, get more clicks, and make more money. Every brand and company has sought to generate more web-based revenue and for those who don’t actually sell products or services, making money from content has been their go-to revenue strategy. In the past decade, the internet has been all about creating content, promoting content, marketing content, and doing it all again. First it was blogs, then long-form videos on YouTube, then photos on Instagram, then short-form text content on Twitter, then short-form videos, and then just *everything*. This is one of the reasons that the internet has become more of a sludge pile to wade through than a place to find clear information.

The incessant posting and content generation by blogs, publications, and social media platforms led to a devaluation of authoritative, quality content, with a focus placed on newness above all else. Weirdly though, I’ve noticed in the past 6 months or so that the pendulum has swung in the opposite direction. I’ve been seeing increasingly older answers to queries rather than the most accurate ones.

For example. I posted on Threads about searching for answer on how to perform a certain function in Photoshop and getting a result from 13 (thirteen!) years ago. If that query was about a fact, an answer from over a decade ago would still be relevant. But for an application that updates their software and UI all the time, that answer was obviously completely unhelpful and inaccurate. That experience was frustrating, but, when it comes to news publications, there can be a real and serious impact on how accurate information is disseminated. Timeliness with respect to relevancy of information is extremely important, now more than ever. With the path Google’s AIO is taking, I’m not convinced this issue will get better. We’re going to see a 25% decrease in the volume of content published due to the loss of monetary incentives and major corporations barring Google from scraping their content, leaving Google’s AIO with less content to crawl, fewer up-to-date articles to scrape, and an increasing number of AI-generated nonsense that’s already filling up the web.

I mean, have you seen Facebook recently? 404media published a great article on a series of AI-generated images of Shrimp Jesus that went viral with an audience of people who “mindlessly interact with them and perhaps don’t understand that they are not real.”

With fewer brands posting content, publications shutting out access, less content in general to pull from, and more AI drivel taking over, Google will eventually eat its own tail. A moment that Nilay Patel, EIC at The Verge, calls “Google Zero.” So, what does Google think about all this? Do they think the current state of SERP is generating “quality results?”

Patel actually got the chance to ask Google CEO Sundar Pichai about his thoughts on the end-user experience on Search with a few examples of his own experience with AI Overviews. When searching “best chromebooks,” Patel describes the results as a bunch of product listings (like, a lot of them), then some headlines that include the phrase “best chromebook,” but no AI Overview to be found. Patel asks very simply, “do you think that’s a good experience today? Like, is that a waypoint or a destination?”

Here’s Pichai’s response: first he tries a couple times to dissect the user experience, claiming that the product worked as intended, and “respected the user’s intent.” They go back and forth a little about some moot details as Pichai tries to deflect, but eventually (after talking about how they’re still testing the feature, it’s hard to predict the future, etc.) the answer to the question is “that seems like a reasonable direction to me.”

Ok, so in the first instance, the feature didn’t even appear, but Pichai said it’s a “reasonable direction.”

Patel shows another example, this time with an AI Overview (ok, we’re already doing better than the first one) showing a downright plagiarized answer (oops, got too excited). In that case, the query was “jetblue mint lounge sfo” and the AIO shows a couple of sentences as the answer, which are lifted word-for-word from the blog it’s pulling from. Clearly, that’s not an answer generated by AI at all; it’s pulled directly from an article. Another failure, but Pichai still claims “people are valuing this experience.”

Well, if not for its utility, definitely for its comedic value.

No one asked me, but if I were CEO, I would simply not launch a feature that could have devastating and dangerous consequences until I’d fixed those glaring issues. I think Google jumped the gun on releasing AI Overviews in an effort to beat the competition in the space. For a company like Google, which has ~91% market share in Search, that’s kind of a biggie.

I’ll be interested to see more reactions from users and potentially more responses from Google about the feature’s failures in the coming weeks. I’m also keeping an eye on Apple, which has yet to release its rumored AI-integrated Siri product. Perhaps it’s waiting until the kinks are ironed out?

Thanks for reading, everyone! What do you think about AI Overviews? Have you turned to a different search engine for your daily use? What do you think the future of Google Search looks like? Tell me in the comments :)

This article was originally posted on a Squarespace domain on 5/24/24. Comments from that domain have been lost.
